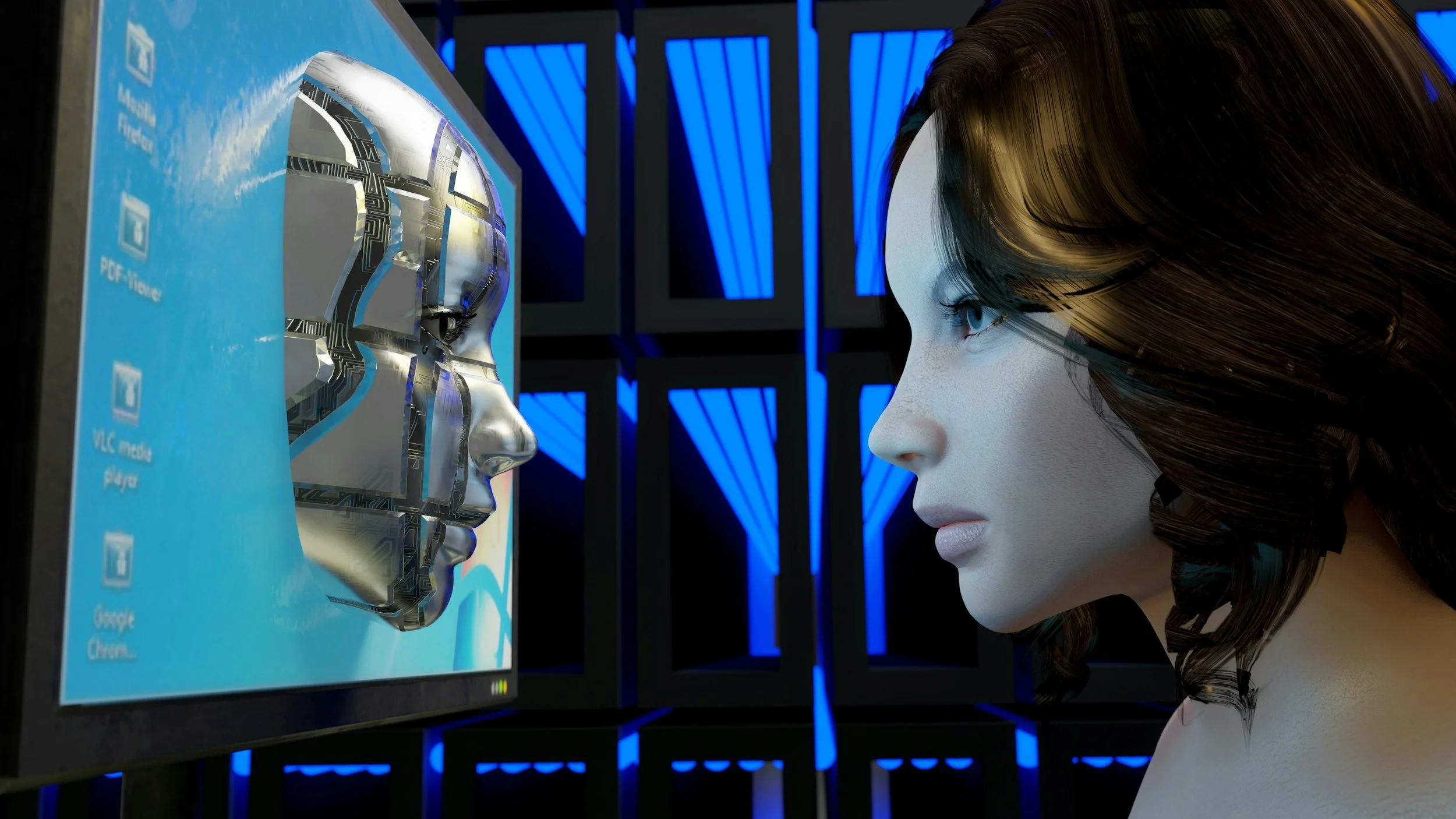

The Algorithmic Mirror: How AI Personalization Shapes Digital Culture and Identity

The Algorithmic Mirror. Video created by Nevada Gray Studio using Google Veo3

The Story of a Culture Trained by Algorithms

Digital culture no longer behaves like a collective audience. Every individual now receives a custom-curated reality from algorithmic recommendation engines across TikTok, Instagram Reels, YouTube, Spotify, and, increasingly, news ecosystems. Personalization no longer merely enhances the media experience; it defines it. As Deloitte notes, social platforms function as entertainment hubs that deliver hyper-curated content ecosystems, guiding how user discovery unfolds and how culture evolves across generations (Widener et al., 2025). This shift has reconditioned expectations for instant relevance, zero-friction engagement, and adaptive storytelling tailored to each user’s behavioral data.

A surprising blind spot remains unaddressed. Personalization design treats user behavior as stable, although human identity fluctuates through crisis, curiosity, or life transitions. Algorithms misinterpret these shifts as permanent preference updates. This phenomenon, known as identity drift misclassification, produces content silos that unintentionally box users into narrow identity loops (Lu et al., 2022). Mass media previously created shared cultural reference points; algorithmic systems now fragment collective narratives into micro-worlds that shape individual identities. This shift has rewritten how society understands storytelling, credibility, and community. The algorithm has become a cultural co-author. This raises a critical question for communicators and UI/UX designers: as identity signals increasingly influence AI performance and return on investment (ROI), how can personalization systems remain adaptive to human complexity without reducing individuals to static, monetizable data profiles (Olden, 2025)?

TEDx Talks. (2025, May 23). Algorithms and identity: Are you truly in control? [Video]. YouTube. https://youtu.be/8Sco65VKpSU?si=qo3xDrpQlvCTIbCV

Real-World Case: TikTok as the Architect of Cultural Micro-Ecosystems

TikTok’s “For You Page” is perhaps the most influential generator of cultural fragmentation. The recommendation engine does not simply predict what users desire; rather, it promotes content that sustains attention within behavioral clusters. This dynamic produces communities like “BookTok” and “FinanceTok,” where identity takes shape through tightly curated micro-cultures. These niche digital ecosystems cultivate belonging while recalibrating audience expectations toward immediacy and adaptive relevance. Users now seek identity-aligned content, creator-driven authenticity, algorithmic narrative velocity, and seamless, search-free discovery. As hyper-personalized media becomes the default experience, the traditional broadcast model recedes in favor of precision-engineered, emotionally responsive communication environments. For example, Taylor and Chen (2024) showed that TikTok’s personalization increases social connectedness through the curation of positively framed, identity-relevant content that users perceive as responsive to who they are. This finding reinforces the concern that algorithmic identity recognition now functions as a primary social signal rather than a purely technical feature. A recent TEDx talk by Basra Mohamud (2025) reinforces the concerns raised by TikTok’s identity-driven recommendation systems, showing how algorithmic curation shapes both content exposure and the ways individuals interpret and reinforce their digital identities within algorithm-driven environments.

Stock Image: Unsplash

Shifting Culture, Shifting Roles: How Communication Careers Must Redefine Expertise

The contemporary communication workforce now functions as a data-driven discipline in which professionals must translate algorithmic systems, personalization engines, and cultural dynamics into strategic narrative design. This evolution requires a new training model built on three competencies. The first is Cognitive–AI Interaction Training, supported by Shanmugasundaram and Tamilarasu (2023), who show that algorithmic environments impose cognitive demands that necessitate literacy in cognitive design and mental-load management. The second is proficiency in Ethical AI and Transparency Frameworks, reflecting Diaz Ruiz’s (2025) findings on the growing need for ethical advertising practices, misinformation mitigation, and oversight of automated distribution systems. The third, Generational Expectation Mapping, emerges from Pew Research Center findings that reveal widening generational divides in trust and highlight the need for communicators to tailor messages for audiences who evaluate credibility through differentiated cultural and digital lenses (Eddy & Shearer, 2025). Together, these training priorities position communicators to operate as strategic interpreters within personalized, algorithm-driven media ecosystems that reward agility, ethical clarity, and attention to audience expectations.

Stock Image: Unsplash

Building Ethical Personalization Systems That Strengthen Culture

A future-ready ethical personalization model requires platform redesign that supports user agency, cultural resilience, and transparent algorithmic governance. Research on cognitive load within algorithmic environments by Shanmugasundaram and Tamilarasu (2023) supports the case for identity reset controls that allow users to recalibrate recommendation systems and reduce the identity-loop stagnation created through repetitive content exposure. Public trust improves when platforms introduce algorithmic transparency dashboards, which aligns with Diaz Ruiz’s (2025) evidence on misinformation risks within automated advertising and the need for visibility into personalization signals; TikTok’s published explanation of the platform’s “For You” system provides an early model of this approach (TikTok, n.d.). Generational findings from Pew Research Center support the creation of cross-community discovery pathways that increase perspective diversity while maintaining personalized engagement (Eddy & Shearer, 2025). Media organizations, in turn, can strengthen cultural cohesion through shared narrative anchors that preserve collective memory and support more adaptive forms of digital storytelling.

Algorithmic personalization has created a media environment intended to feel intimate, responsive, and human, although this intimacy introduces new expectations and cultural consequences. Society now anticipates media systems that adapt at the pace of emotion, identity, culture, and curiosity. Communicators who understand these dynamics gain a strategic advantage in shaping the next era of storytelling, an era defined by ethical personalization, cultural fluency, and human-centered communication design.

References:

Diaz Ruiz, C. A. (2025). Disinformation and fake news as externalities of digital advertising: A close reading of sociotechnical imaginaries in programmatic advertising. Journal of Marketing Management, 41(9–10), 807–829. https://doi.org/10.1080/0267257X.2024.2421860

Eddy, K., & Shearer, E. (2025, October 29). How Americans’ trust in information from news organizations and social media sites has changed over time. Pew Research Center. https://www.pewresearch.org/short-reads/2025/10/29/how-americans-trust-in-information-from-news-organizations-and-social-media-sites-has-changed-over-time/

Lu, C., Kay, J., & McKee, K. (2022). Subverting machines, fluctuating identities: Re-learning human categorization. In Proceedings of the 2022 ACM Conference on Fairness, Accountability, and Transparency (FAccT ’22) (pp. 1–11). Association for Computing Machinery. https://doi.org/10.1145/3531146.3533161

Olden, E. (2025, November 25). How identity may be the missing link in AI ROI. Forbes Technology Council. https://www.forbes.com/councils/forbestechcouncil/2025/11/25/how-identity-may-be-the-missing-link-in-ai-roi/

Shanmugasundaram, M., & Tamilarasu, A. (2023). The impact of digital technology, social media, and artificial intelligence on cognitive functions: A review. Frontiers in Cognition, 1–11. https://doi.org/10.3389/fcogn.2023.1203077

Taylor, S. H., & Chen, Y. A. (2024). The lonely algorithm problem: The relationship between algorithmic personalization and social connectedness on TikTok. Journal of Computer-Mediated Communication, 29(5), zmae017. https://doi.org/10.1093/jcmc/zmae017

TikTok. (n.d.). Responsible AI principles. TikTok Transparency Center. https://www.tiktok.com/transparency/en-us/responsible-ai-principles

Widener, C., Arbanas, J., Van Dyke, D., Arkenberg, C., Matheson, B., & Auxier, B. (2025). 2025 digital media trends: Social platforms are becoming a dominant force in media and entertainment. Deloitte Insights. https://www.deloitte.com/us/en/insights/industry/technology/digital-media-trends-consumption-habits-survey/2025.html

Nevada Gray Studio